
Discriminatory algorithms, AI hallucinations and data privacy – Sara Ibrahim looks at the key risks for lawyers

People could be forgiven for finding the current debate around artificial intelligence, and generative AI in particular, overly fraught. Depending on the enthusiasm of the speaker, AI is either advocated as a cure-all for human failings or feared as a threat to workers, including lawyers.

Generative AI, and ChatGPT in particular, has attracted the most attention because it can generate natural language answers to complex questions. With clients and the courts increasingly using AI, lawyers would be well advised to understand both the potential ‘pratfalls’ and the advantages of integrating AI into their practices.

Debate is centring on calls for more, and faster, regulation. The pace of regulatory change to date has been slow: even the much-awaited EU Artificial Intelligence Act is not expected to come into force until the end of 2023, with a number of provisions applying only 24 months after that. In the UK, a white paper has been published, with the government aiming to report on its proposed next steps by the autumn (A pro-innovation approach to AI regulation, Department for Science, Innovation and Technology, March 2023). The EU, US and UK proposals share some common threads, including the need to safeguard data privacy and to combat discrimination embedded in algorithms or in the data sets used to train large language models.

During the pandemic, many barristers’ practices were fundamentally reshaped by technology, from the adoption of electronic bundles to the rise of remote hearings. Those at the Commercial Bar will have seen disclosure software become commonplace as a way of streamlining litigation and reducing costs.

In February this year, Luminance, an AI document search tool, was used in an Old Bailey murder trial. The criminal defence barrister called on the Ministry of Justice to invest in the technology to save the time spent manually searching through thousands of pages of disclosure (see ‘Robot laws: First barrister to use AI in Old Bailey murder trial urges government to push forward cutting edge tech’, Louis Goss, City AM, 7 February 2023). Those who have adapted their ways of working to make use of technology are already seeing tangible benefits, and further changes will come. For those at the start of their practice, familiarity with AI and how it can be used will become essential.

On the solicitor side of the profession, there have been some enthusiastic adopters of AI tools. Around 3,500 Allen & Overy lawyers have access to Harvey, an AI platform built with OpenAI’s latest models, according to the Financial Times (‘AI shakes up way we work in three key industries’, Sarah O’Connor, Christopher Grimes and Cristina Criddle, Financial Times, 18 June 2023).

Harvey is being used to save time on tasks traditionally assigned to junior members of staff. Earlier this year, Mishcon de Reya announced it was recruiting a dedicated engineer to explore ‘how generative AI can be used within a law firm, including its application to legal practice tasks and wider law firm business tasks’. As with previous waves of change, barristers will inevitably be nudged towards AI literacy by the expectations of the solicitors who instruct them.

Besides productivity, one of the presumed benefits of AI is its infallibility. Unlike humans, who can be erratic and biased in their decision-making, AI is assumed to be dependable, and this assumption often leads to widespread and uncritical adoption. Yet algorithms have already been shown to exhibit bias in financial services by denying credit on grounds of race or gender. Such bias can originate either in data sets that import the pre-existing biases of previous human decision-makers or in data sets that are incomplete or skewed. The resulting feedback loops can be far more dangerous than bias in a single human decision-maker, because an AI or algorithm can apply discriminatory criteria at scale.

Lord Sales, Justice of the UK Supreme Court, gave a keynote address to the Information Law Conference in April examining the government’s use of algorithms (‘Information law and automated governance’, 24 April 2023). He referred to the use of facial recognition technology considered in R (Bridges) v Chief Constable of South Wales [2020] EWCA Civ 1058. One of the issues determined by the Court of Appeal in that case was whether the police had breached their Public Sector Equality Duty (‘PSED’) under s 149 of the Equality Act 2010, specifically whether the facial recognition software used was biased on grounds of race or sex. It was held that the police force had not done all it reasonably could to fulfil its PSED. Although the PSED applies only to public bodies, the broader Equality Act obligations not to discriminate also apply to others, such as service providers and certain associations and clubs (see the Equality Act guidance published by the Equality and Human Rights Commission). In his speech, Lord Sales also canvassed the concept of a ‘human in the loop’ as a way of ensuring that algorithmic bias is identified and rooted out.

Barristers should be mindful of their core duties, including the duty under CD8 of the Code of Conduct not to discriminate. Under rC12, this includes a duty not to discriminate against a person ‘on the grounds of race, colour, ethnic or national origin, nationality, citizenship, sex, gender re-assignment, sexual orientation, marital or civil partnership status, disability, age, religion or belief, or pregnancy and maternity’. This could foreseeably apply where chambers use ‘biased’ AI to help make decisions such as whom to recruit or how to allocate work. More fundamentally, under rC19, barristers need to be clear about who is responsible for providing legal services and the terms on which those services are supplied. It might therefore be good practice to tell clients when AI tools are used and what human oversight or checks are applied to them.

A key risk for lawyers is that AI tools can fabricate information, producing so-called ‘hallucinations’. This has already happened in court in both the UK and the US. A New York attorney used generative AI to help identify case law and relied on the results in his filings in a personal injury claim (Lawyer’s AI Blunder Shows Perils of ChatGPT in ‘Early Days’, Justin Wise, Bloomberg Law, 31 May 2023). The AI tool had fabricated case law, including citations, and the attorney had not verified it. When this came to light, the court ordered both the attorney and his firm to show cause why they should not be sanctioned.

In May it was reported that a litigant in person in a civil case in Manchester had provided four case citations after consulting ChatGPT. Although three of the four cases were real, the passages cited did not support the principles contended for (‘LiP presents false citations to court after asking ChatGPT’, John Hyde, Law Gazette, 29 May 2023). The judge found that there had been no intention to mislead, but the episode shows that caution is needed whenever an AI tool puts forward novel points or unfamiliar authorities.

A barrister who cited fabricated case law would presumably fall foul of the Code of Conduct’s prohibition on recklessly misleading the court. Barristers using AI for any legal research would be well advised to verify any material generated by ChatGPT or equivalent tools.

Most lawyers are familiar with the UK General Data Protection Regulation and the need for data security. The Information Commissioner’s Office (ICO) has recently published helpful guidance making clear that fairness in data protection law can encompass ‘AI driven discrimination’ (see ‘What about fairness, bias and discrimination?’ ICO). The guidance reminds readers that some protected characteristics can also amount to special category data. For example, information about a disability will be special category data where it concerns health. Further, when machine learning tools combine data sets, special category data may be inferred even if it was never directly collected. The ICO can be expected to use its powers to ensure that data is accurate and that AI tools do not process it in a discriminatory way.

Lawyers who properly understand the benefits and limitations of AI will gain in the new legal landscape.


The Chair of the Bar sets out how the new government can restore the justice system

In the first of a new series, Louise Crush of Westgate Wealth considers the fundamental need for financial protection

Unlocking your aged debt to fund your tax in one easy step. By Philip N Bristow

Possibly, but many barristers are glad he did…

Mental health charity Mind BWW has received a £500 donation from drug, alcohol and DNA testing laboratory, AlphaBiolabs as part of its Giving Back campaign

The Institute of Neurotechnology & Law is thrilled to announce its inaugural essay competition

How to navigate open source evidence in an era of deepfakes. By Professor Yvonne McDermott Rees and Professor Alexa Koenig

Brie Stevens-Hoare KC and Lyndsey de Mestre KC take a look at the difficulties women encounter during the menopause, and offer some practical tips for individuals and chambers to make things easier

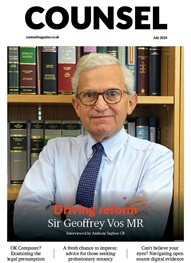

Sir Geoffrey Vos, Master of the Rolls and Head of Civil Justice since January 2021, is well known for his passion for access to justice and all things digital. Perhaps less widely known is the driven personality and wanderlust that lies behind this, as Anthony Inglese CB discovers

The Chair of the Bar sets out how the new government can restore the justice system

No-one should have to live in sub-standard accommodation, says Antony Hodari Solicitors. We are tackling the problem of bad housing with a two-pronged approach and act on behalf of tenants in both the civil and criminal courts