
Andrew Goddard KC and Laura Hussey consider the new skills barristers will need to become the legal superstars of the future

Civil litigation is an ever-evolving creature but, until recently, the progressive and procedural changes were motivated by what might be termed internal forces. That is to say, they sprang from a review of the courts’ own procedures by both lawyers and judges, concerned to see whether the existing procedures required updating to improve efficiency without adversely affecting the quality of the litigation process and, indeed, with the intention of improving it. Examples which spring to mind include written openings, witness statements and lists of issues served in advance of a trial. All of these seemed revolutionary when they were first proposed, but we now take them for granted.

We are currently at the dawn of the next revolutionary change in civil litigation. However, unlike its predecessors, this change comes from an external force which has the capacity to alter the litigation process and legal landscape more radically than we may be able to imagine. That external force can be summed up by two letters: AI.

Many conclude that it is inevitable that, just as the Industrial Revolution drastically changed the world, so too will AI. Yet, despite this inevitability, for many barristers (possibly most) AI is something about which there is little more than a sketchy understanding. Few of us understand in any significant detail how large language models (LLMs) work or how algorithms are modelled and then learn, so that AI can reach conclusions or offer predictions.

Many algorithms now used in legal practice are smarter than human beings. They can assimilate and interrogate volumes of data that the human brain cannot; see patterns and trends to which the human brain is oblivious; and do so in a fraction of the time that human efforts would take. And at a fraction of the cost.

Basic legal research, such as searching for a topic-specific document, for authorities considering a point of law, or for a particular standard form of contract provision, is where the most obvious but least ground-breaking gains are being made. While AI will take this capability a huge leap forward, it likely won’t change the nature of the task any more than LexisNexis changed the nature of legal research. These systems involve a low level of AI, and essentially automate a manual process.

The real leap up is that lawyers are using, and will increasingly use (as its reliability and functionality increase), an LLM system to inform the advice given to a client or a submission made to a court or arbitral tribunal. When given the relevant facts and parameters specific to a real case, AI can provide its prediction of the likely resolution or outcome of the issue. It can also justify and explain its reasoning by reference, inter alia, to case law and what the AI considers to be, for example, trends in judicial decision making.

However, it needs to be appreciated that an LLM system is only as good as the linguistic data upon which it relies. LLMs must be trained, and the more up-to-date and authoritative data that they have, the more useful they are likely to be. But databases do not contain everything, and barristers will need to consider the limitations of the linguistic data the LLM system is relying upon.

Another consideration is that in order to have the LLM system return the most helpful and pertinent information, the right prompts must be deployed. As everyone who uses Google knows, how you phrase a search query can significantly alter the results which are returned. If a prompt is going to be used to generate advice and submissions, a new critical talent for barristers is likely to be understanding how prompts work and how best to compose them. This involves gaining an understanding of how the algorithms work and learn. Not easy; but in the future, surely necessary.

Perhaps the most significant risk to consider is that AI based upon LLM technology can ‘hallucinate’. The term is of such importance that it was Cambridge Dictionary’s Word of the Year 2023, where it is explained thus:

When an artificial intelligence hallucinates, it produces false information... AI tools, especially those using large language models (LLMs), have proven capable of generating plausible prose, but they often do so using false, misleading or made-up ‘facts’. They ‘hallucinate’ in a confident and sometimes believable manner.

Although the problem of hallucinations is likely to be solved in the long term, until it is, barristers embracing AI need to learn to sift through potential hallucinations and to fill in any gaps left behind.

And what about the next leap? AI will be used to assist in advocacy, not only helping to draft opening and closing submissions but also preparing cross-examination, with the ability to cover endless permutations of how the witness may answer.

Despite the possible risks, it is evident not only that AI is here to stay and is going to change the world, but also that it is something the legal profession should look to embrace for all the benefits it has brought and will bring. Many of the top solicitors’ firms are already investing in their own bespoke AI. But consider the lawyer who does not use the available technology, and so misses a line of argument that the AI would have suggested, and which might have won the argument in court. Or the lawyer who does not use the available technology and so does not receive the AI’s prediction that the case would be likely to fail, and who ploughs on to lose in court at great cost to their client.

To paraphrase Donald Rumsfeld, we are in the realms of both ‘known unknowns’ and ‘unknown unknowns’ when it comes to AI and litigation. What is known is that AI is here to stay. What may not yet be known, but which appears to some very likely, is that the legal superstars of the future may no longer be those with the best ‘legal skills’ as we know them now, but instead those who embrace AI while recognising its risks.

Perhaps the future legal superstars will be those who are best at understanding how AI works, creating the most useful prompts, sifting out hallucinations, and combining this technical know-how with the human qualities which are still so important to our profession (and which cannot be supplied by AI).

If it has not happened already, it surely cannot be long before law degree courses offer an understanding of AI as part of the curriculum. It may even become as mandatory as contract and tort in the professional examinations. There are, of course, numerous online courses and tutorials giving insight into how LLMs and algorithms work and can be made to work better. Some of you have already signed up… may the force be with you.


The Chair of the Bar sets out how the new government can restore the justice system

In the first of a new series, Louise Crush of Westgate Wealth considers the fundamental need for financial protection

Unlocking your aged debt to fund your tax in one easy step. By Philip N Bristow

Possibly, but many barristers are glad he did…

Mental health charity Mind BWW has received a £500 donation from drug, alcohol and DNA testing laboratory, AlphaBiolabs as part of its Giving Back campaign

The Institute of Neurotechnology & Law is thrilled to announce its inaugural essay competition

How to navigate open source evidence in an era of deepfakes. By Professor Yvonne McDermott Rees and Professor Alexa Koenig

Brie Stevens-Hoare KC and Lyndsey de Mestre KC take a look at the difficulties women encounter during the menopause, and offer some practical tips for individuals and chambers to make things easier

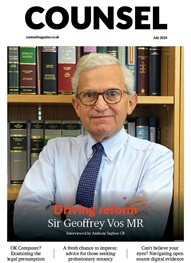

Sir Geoffrey Vos, Master of the Rolls and Head of Civil Justice since January 2021, is well known for his passion for access to justice and all things digital. Perhaps less widely known is the driven personality and wanderlust that lies behind this, as Anthony Inglese CB discovers

The Chair of the Bar sets out how the new government can restore the justice system

No-one should have to live in sub-standard accommodation, says Antony Hodari Solicitors. We are tackling the problem of bad housing with a two-pronged approach and act on behalf of tenants in both the civil and criminal courts