
Mia Leslie shares techniques developed by the Public Law Project to help identify whether your client has been affected by automated state decision-making

Increasingly, government and public bodies are using automation, algorithms and AI to make vital decisions in areas such as immigration, welfare, policing, housing and education. These decisions have far-reaching effects on people, so how can we know if these technologies are at play?

Public Law Project (PLP) adopts the term ‘automated decision-making’ (ADM) to refer to the role different technologies play within government decision-making systems and processes. ADM has become a central tool of modern government, helping decision-makers navigate complexity and uncertainty, while maximising limited time and resources. But government departments have not been forthcoming about this use: their embrace of it has been remarkably opaque.

There is no systematic publication of information about how and why public authorities procure, develop and use ADM systems. When individuals receive decision notifications from such authorities, they are not notified about the presence of ADM within the decision-making process or its role in determining the outcome.

This opacity is a concern because it can act as a barrier to scrutiny, hampering the necessary legal analysis and making it harder to understand whether the decision made is fair, lawful and non-discriminatory. For example, it can be difficult to obtain enough information about the interaction between the automated system and the human decision-maker to know whether a human is meaningfully involved in decisions that have a legal or similarly significant effect, as is needed to avoid a breach of Article 22(1) of the UK GDPR (see ‘What does the UK GDPR say about automated decision-making and profiling?’, Information Commissioner’s Office).

So, how can practitioners understand, for example, whether the Department for Work and Pensions has used AI to flag their client’s universal credit claim for a fraud investigation? What are the key ‘signs’ and ‘signals’ of where ADM may be present in decisions?

Over the past four years, PLP has blended research and casework methods to build a better picture of where ADM systems exist, how decisions are reached, and who might be affected. While there is still a long way to go to achieve sufficient levels of systemic transparency of ADM, PLP has identified a set of techniques that practitioners can adopt to identify whether a decision affecting their client involves automation. The success of these methods depends on the willingness of public authorities to engage, and the extent to which exemptions under the Freedom of Information Act (FOIA) are relied on.

Sometimes, the identification of automation within a decision-making process requires extensive work. Other times, the basic information may be presented in accessible ways.

PLP has identified the presence of automation in some processes by decoding the use of ambiguous terminology that refers to ‘decision support’ or ‘decision recommendations’ presented to caseworkers. These terms are often buried within casework guidance or in the reports of independent monitoring bodies, such as the Independent Chief Inspector of Borders and Immigration. Other signs may be embedded within descriptions or overviews of decision-making or flow processes, such as reference to claims or cases being ‘flagged’ to caseworkers for review or further investigation – without any reference to who or what does the flagging.

With some other automated systems, the signals for detection can be less conspicuous. In these instances, practitioners might be able to ‘sniff out’ the presence of automation where certain decisions are made by public authorities at a rapid pace. Or they might notice where the number of decisions doesn’t quite compute with the number of staff in the relevant department, and the timeframe in which they ‘made’ the decisions in question.

Beyond these broad attempts to scope out the existence of ADM within decision-making systems and processes by looking for ‘signs’ and ‘signals’, there are some more precise methods that can be used to identify ADM and possibly assess its impact.

For a systemic overview of a decision-making process, analysis of the relevant Data Protection Impact Assessment (DPIA) can shine a light on the potential risks of a data processing system or project, including whether the processing activity includes automated decision-making with legal or similarly significant effect.

If ADM is identified, or even strongly suspected, an analysis of the relevant Equality Impact Assessment (EIA) might confirm its existence. In setting out equality considerations, the public authority might explain where an automated tool sits within the applicable decision-making process, and the impact of the system on the protected characteristics of the individuals processed.

However, there is no general requirement for DPIAs or EIAs to be published and so it is often necessary to request access under the FOIA, the results of which can vary. Requests are sometimes refused, citing reliance on sections of FOIA that exempt information from disclosure, such as where disclosure would prejudice effective law enforcement (s 31). When documents are disclosed, swathes of information can be withheld through extensive redactions, limiting the possibility of fully understanding the presence of ADM and its impact.

Subject access requests (SARs) can be a valuable way to top up the more systemic information contained in DPIAs and EIAs. Routinely, SARs are submitted to obtain a copy of the client’s personal data as held by the public authority. But they can also be tailored to request information under Article 12 of the UK GDPR, which requires data controllers to take appropriate measures to provide information under Articles 13, 14, 15 and 22.

If fully engaged with by the public authority, such requests can yield information on whether the individual has been subject to a solely automated decision or profiling and, if relevant, which exemption was applied. Or they can show whether any decision support tool has been used when making or informing decisions relating to the outcome in question, which can be particularly useful for addressing the question of whether an automated system is involved in ‘decision-making’ or ‘decision-recommending’.

While possibly helpful for identifying the presence of ADM in a particular decision, submitting a SAR requires the identification of an affected individual, a prerequisite that opacity can often frustrate in this area. Even if that hurdle is crossed, submission of a SAR requires explicit consent from the affected individual and will ultimately only produce information relevant to their specific case.

By applying a combination of these methods over the last few years, PLP has compiled its findings of ADM tools into a public database, the TAG Register. The register serves as a useful starting point and functional resource for practitioners looking to take the first steps towards identifying ADM and assessing whether its use is fair, lawful and non-discriminatory.

But the onus shouldn’t be on clients, organisations or practitioners to dig this information out. Transparency around the use of ADM in public decision-making should be government-initiated or, at the very least, straightforwardly available to the individuals affected and those representing them. PLP is continuously working to improve the transparency of both the use of ADM and of the tools themselves as a first step to ensuring public authorities can be properly held to account for their use of ADM.

The Chair of the Bar sets out how the new government can restore the justice system

In the first of a new series, Louise Crush of Westgate Wealth considers the fundamental need for financial protection

Unlocking your aged debt to fund your tax in one easy step. By Philip N Bristow

Possibly, but many barristers are glad he did…

Mental health charity Mind BWW has received a £500 donation from drug, alcohol and DNA testing laboratory, AlphaBiolabs as part of its Giving Back campaign

The Institute of Neurotechnology & Law is thrilled to announce its inaugural essay competition

How to navigate open source evidence in an era of deepfakes. By Professor Yvonne McDermott Rees and Professor Alexa Koenig

Brie Stevens-Hoare KC and Lyndsey de Mestre KC take a look at the difficulties women encounter during the menopause, and offer some practical tips for individuals and chambers to make things easier

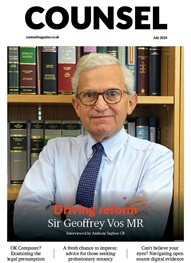

Sir Geoffrey Vos, Master of the Rolls and Head of Civil Justice since January 2021, is well known for his passion for access to justice and all things digital. Perhaps less widely known is the driven personality and wanderlust that lies behind this, as Anthony Inglese CB discovers

The Chair of the Bar sets out how the new government can restore the justice system

No-one should have to live in sub-standard accommodation, says Antony Hodari Solicitors. We are tackling the problem of bad housing with a two-pronged approach and act on behalf of tenants in both the civil and criminal courts