
Alex Goodman KC on why our electoral laws need an urgent upgrade – they were not designed to address the corruption of popular opinion by AI and deepfakes

2024 is the first year in history in which over half the human population will have had a chance to cast a vote. Most of those electors will have been affected by misinformation, deepfakes and artificial intelligence. How might we amend the UK’s electoral laws to anticipate and obviate the threats which these innovations pose?

Democracies have been confronting deepfake influence on elections since at least 2019, when a video was manipulated to make Nancy Pelosi (Speaker of the US House of Representatives) appear to slur her words and seem unwell. India has grappled with deepfake technology since 2020, when the BJP promoted a candidate by making him appear to speak fluently in a Hindi dialect (presumably to make him seem more ‘local’). More recently, a deepfake image of an explosion at the Pentagon briefly caused a dip in stock markets. Donald Trump Jr recently shared a deepfake of a CNN anchor, and Ron DeSantis posted a video featuring deepfake images of Donald Trump embracing Dr Anthony S. Fauci. On 9 October 2023, Sir Keir Starmer became the first senior British politician to find himself the subject of a ‘deepfake’ audio clip circulating widely on social media, in which he appeared to berate a junior staffer for losing some equipment. Deepfake audio was also disseminated two days before Slovakia’s elections last year. Javier Milei was elected president of Argentina after a campaign in which both candidates were reported to have used deepfakes and AI, including AI images depicting Milei’s rival as a Chinese Communist leader.

British legislation has long tried to prevent psychological manipulation, deception and corruption around elections. Section 22 of the Bribery at Elections Act 1842 made ‘treating’ a criminal offence and a ‘corrupt practice’: a person committed it if he provided ‘any meat, drink, entertainment… for the purpose of corruptly influencing that person … to vote or refrain from voting’. The current s 114 of the Representation of the People Act 1983 derives from s 22 of the 1842 Act and still prohibits such corrupt practices. Section 114A of the 1983 Act prohibits undue influence, an offence that includes ‘placing undue spiritual pressure on a person’ and ‘doing any act designed to deceive a person in relation to the administration of an election’.

Thus, our electoral laws already embody the values that elections should be fair and honest, and they already strive to eliminate mischiefs that undermine those values. Our laws aim to prohibit the pressuring of people’s spirits, the deceiving of their minds and the corruption of their will. UK electoral law strikes a venerable and reliable balance between free speech, regulation of the media and fair elections. There is no immediate need to revisit the principles of this balance: embarking on such debates would inevitably result in years of delay (exacerbated by vested interests).

However, UK electoral law is aimed at manipulation techniques and technologies of a different era. Its origins are in the 19th century, and it is concerned mainly with the regulation of comments about candidates and material from political parties. Our electoral laws were not designed to address the corruption of popular opinion by AI and deepfakes.

Consider how something like the scenario in Tracy Chapman’s ‘Across the Lines’ might be generated or manipulated by bad actors to influence the forthcoming UK elections. A reminder of the lyrics:

‘Little black girl gets assaulted/ Ain’t no reason why/ Newspaper prints the story/ And racist tempers fly/ Next day it starts a riot/ Knives and guns are drawn/ Two black boys get killed/ One white boy goes blind… Choose sides/ Run for your life/ Tonight the riots begin’.

Suppose the reports that sparked the rioting (online, not in print), and much of the reporting that followed, turned out to be deepfakes spread rapidly across social media by artificial intelligence to manipulate the talking points of the election. Suppose they managed to tilt the whole narrative arc of the election towards questions about race relations, a stamping ground of the far right. Such manipulation is redolent of the ‘psy-ops’ we hear attributed to Russia. It does not align with our idea of a fair election. Yet it is difficult to see what tools our regulators could currently use to stop such corruption in its tracks. We need to install some updates.

An immediate fix could be to update the existing rules governing impartiality in broadcasting. Section 92 of the Representation of the People Act 1983 already prohibits attempts to circumvent those rules by broadcasting from abroad. Yet the legislation does not extend to social media platforms. You can sit watching a smart TV, flicking between the regulated BBC and unregulated YouTube. This is anomalous and outdated. There is nothing controversial in suggesting that these newer forms of broadcasting should be regulated. We already have laws that attempt to regulate broadcasting to safeguard the fair conduct of elections; it is just that the mechanisms of that regulation are outdated.

The means of enforcement also need an upgrade. Our laws remain based on a 19th-century design which sought to prohibit corrupt practices within single geographic constituencies through retrospective criminal punishment and election petitions. These mechanisms are not fit for redressing AI-driven threats capable of overwhelming whole electorates. The particular problems posed by those who exploit generative AI to create deepfakes are volume and reach: automated misinformation can be generated at near-limitless pace and volume. AI is also capable, as the Cambridge Analytica scandal showed, of exploiting highly targeted psychological profiling data, and it can learn the best way to reach its target audience.

There is a need for bodies with powers to act at great speed to halt the spread of misinformation and deepfakes. The Electoral Commission, Ofcom, the Information Commissioner or other existing regulators might be given enhanced powers and resources. However, it would probably be better to establish a new specialist body with technical understanding and expertise, since there may be complex specialist issues involving both commercial confidentiality and, as with Cambridge Analytica, the unlawful misuse of personal data. The Independent Panel on Terrorism might offer a model for judicial oversight of confidential material, allowing the inspection of matters such as AI tools, example outputs and data about how those tools are used.

In the longer run, we need a means of bringing liability to bear on social media platforms to halt the dissemination of misinformation and deepfakes. The panoply of public regulators covering equality, finance, telecoms and competition might have their remits expanded. The Equality and Human Rights Commission, for example, should be resourced on an ongoing basis to combat racial and other biases in AI. The Information Commissioner, the police and other agencies all need to be empowered and resourced to move speedily. In the meantime, the effectiveness of the Big Tech ‘Tech Accord’ for the 2024 elections will be informative.

There is a small window of opportunity for the UK to take a global lead in safeguarding our democracies. If it does not, we risk governments across the world, themselves elected on the back of deepfakes, AI and misinformation, losing the legitimacy to redress the threat.

Tory and Whig agents both attempting to bribe an innkeeper for his vote, in Canvassing for Votes from William Hogarth’s The Humours of an Election series, 1755.

2024 is the first year in history in which over half the human population will have had a chance to cast a vote. Most of those electors will have all been affected by misinformation, deepfakes and artificial intelligence. How might we amend the UK’s electoral laws to anticipate and obviate the threats which these innovations pose?

Democracies have been confronting deepfake influence on elections since at least 2019 when a video was designed to make Nancy Pelosi (Speaker of the US House of Representatives) appear slurred and ill. India has grappled with the use of deepfake technology since 2020 when the BJP promoted a candidate by making him appear to speak fluently in a Hindi dialect (presumably to make him appear more ‘local’). More recently, a deepfake video of an explosion at the Pentagon briefly caused a dip in stock markets. Donald Trump Jr recently shared a deepfake of a CNN Anchor and Ron de Santis posted deepfake videos of Donald Trump embracing Dr Anthony S. Fauci. On 9 October 2023, Sir Keir Starmer became the first senior British politician to find themselves the subject of a ‘deepfake’ audio clip circulating widely on social media, in which he appeared to berate a junior staffer for losing some equipment. Deepfake audio was also disseminated two days before Slovakia’s elections last year. Javier Milei was elected as president of Argentina after an election in which both candidates were reported to have made use of deepfakes and AI, including AI images depicting Mile’s rival as a Chinese Communist leader.

British legislation has long tried to prevent psychological manipulation, deception and corruption around elections. Section 22 of the Bribery at Elections Act 1842 made it a criminal offence of ‘corrupt practice’ of ‘treating’ if he provides ‘any meat, drink, entertainment… for the purpose of corruptly influencing that person … to vote or refrain from voting’. The current s 114 of the Representation of the People Act 1983 is derived from s 22 of the Bribery at Elections Act 1842 and still prohibits such corrupt practices. Section 114A of the 1983 Act prohibits a person from exerting undue influence – a crime that includes ‘placing undue spiritual pressure on a person’ and ‘doing any act designed to deceive a person in relation to the administration of an election’.

Thus, our electoral laws already embody values that an election should be fair and honest and they already strive to eliminate mischiefs that undermine those values. Our laws aim to prohibit the pressuring of people’s spirits, the deceiving of people’ s minds and the corruption of their will. UK electoral law cuts a venerable and reliable balance between free speech, regulation of media and fair election. There is no immediate need to revisit the principles of this balance: embarking on such debates will inevitably result in years of delay (exacerbated by vested interests).

However, UK electoral law is aimed at manipulation techniques and technologies of a different era. Its origins are in the 19th century, and it is concerned mainly with the regulation of comments about candidates and material from political parties. Our electoral laws were not designed to address the corruption of popular opinion by AI and deepfakes.

Consider how something like the scenario from Tracy Chapman’s Across the Lines might be generated or manipulated by bad actors to influence the forthcoming UK elections. A reminder of the lyrics:

‘Little black girl gets assaulted/ Ain’t no reason why/ Newspaper prints the story/ And racist tempers fly/ Next day it starts a riot/ Knives and guns are drawn/ Two black boys get killed/ One white boy goes blind… Choose sides/ Run for your life/ Tonight the riots begin’.

Suppose the reports that sparked the rioting (online, not print), and much of the reporting that follows transpire to have been deepfakes spread rapidly by artificial intelligence across social media to manipulate the talking points of the election. Suppose they manage to tilt the whole narrative arc of the election towards questions about race relations, a stamping ground of the far right. Such manipulation is redolent of the ‘psy-ops’ we may hear about in Russia. It does not align with our idea of a fair election. However, it is difficult to see what tools our regulators might currently use to stop such corruption in its tracks. We need to install some updates.

An immediate fix could be to update existing rules which govern impartiality in broadcasting. Section 92 of the Representation of the People Act 1983 already prohibits attempts to circumvent those rules by broadcasting from abroad. Yet the legislation does not extend its reach to social media platforms. You can sit watching a smart TV, flicking between the regulated BBC and unregulated YouTube. This is anomalous and outdated. There is nothing controversial about suggesting that these innovations in broadcasting should be regulated. We already have laws that attempt to regulate broadcasting to safeguard the fair conduct of elections- it is just that the mechanisms of such regulation are outdated.

The means of enforcement also need an upgrade. Our laws remain based on a 19th century design which sought to prohibit corrupt practices within single geographic constituencies by retrospective criminal punishments and election petitions. But these mechanisms are not fit for redressing threats from AI which are capable of overwhelming whole electorates. The particular problems posed by those who exploit generative AI to create deepfakes are volume and reach. Automated misinformation can be generated at a near-limitless pace and volume. AI is also capable, as the Cambridge Analytica scandal showed, of deploying highly targeted psychological and profiling data and it can learn the best way to reach its target audience.

There is a need for bodies with powers to act at great speed to halt the spread of misinformation and deepfakes. The Electoral Commission, Ofcom, the Information Commissioner or other existing regulators might be given enhanced powers and resources. However, it is probably better that a new specialist body with technical understanding and expertise is established since there may be complex specialist issues involving the navigation of both commercial confidentiality and – as with Cambridge Analytica – unlawful misuse of personal data. The Independent Panel on Terrorism might offer a model that could allow judicial oversight of confidential material – for the inspection of matters like AI tools, example outputs and data about the use of these tools.

In the longer run, we need a means of bringing liability to bear on social media platforms to halt the dissemination of misinformation or deepfakes. The panoply of public regulators of equality, finance, telecoms and competition might have their remits expanded. The Equality and Human Rights Commission for example should be resourced on an ongoing basis to combat racial and other biases in AI. The Information Commissioner, the Police and other agencies all need to be empowered and resourced to move speedily. In the meantime, the effectiveness of the (Big Tech) ‘Tech Accord’ for the 2024 elections will be informative.

There is a small window of opportunity for the UK to take a global lead in trying to safeguard our democracies. If it doesn’t, we face a risk that governments across the world, themselves elected on the back of deepfakes, AI and misinformation will lose the legitimacy to redress the threat.

Tory and Whig agents, both attempting to bribe an innkeeper to vote for them in Canvassing for Votes from William Hogarth’s The Humours of an Election series, 1755.

Alex Goodman KC on why our electoral laws need an urgent upgrade – they were not designed to address the corruption of popular opinion by AI and deepfakes

The Chair of the Bar sets out how the new government can restore the justice system

In the first of a new series, Louise Crush of Westgate Wealth considers the fundamental need for financial protection

Unlocking your aged debt to fund your tax in one easy step. By Philip N Bristow

Possibly, but many barristers are glad he did…

Mental health charity Mind BWW has received a £500 donation from drug, alcohol and DNA testing laboratory, AlphaBiolabs as part of its Giving Back campaign

The Institute of Neurotechnology & Law is thrilled to announce its inaugural essay competition

How to navigate open source evidence in an era of deepfakes. By Professor Yvonne McDermott Rees and Professor Alexa Koenig

Brie Stevens-Hoare KC and Lyndsey de Mestre KC take a look at the difficulties women encounter during the menopause, and offer some practical tips for individuals and chambers to make things easier

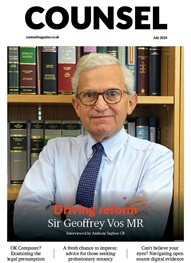

Sir Geoffrey Vos, Master of the Rolls and Head of Civil Justice since January 2021, is well known for his passion for access to justice and all things digital. Perhaps less widely known is the driven personality and wanderlust that lies behind this, as Anthony Inglese CB discovers

The Chair of the Bar sets out how the new government can restore the justice system

No-one should have to live in sub-standard accommodation, says Antony Hodari Solicitors. We are tackling the problem of bad housing with a two-pronged approach and act on behalf of tenants in both the civil and criminal courts