Deepfakes are much in the news these days. What exactly are they? A deepfake is content (video, audio or otherwise) that is wholly or partially fabricated, or existing content that has been manipulated. While textual material, such as that generated by OpenAI’s GPT-3 large language model, might be regarded as a ‘deepfake’, in popular parlance the term usually refers to images or videos, such as: the viral impersonation of actor Tom Cruise on TikTok; Buzzfeed’s Barack Obama deepfake, in which the former president seems to engage in uncharacteristic profanity; and, more recently, deepfake videos of Putin and Zelensky which have appeared as propaganda during the war in Ukraine.

Of course, it has been possible for decades to fake or alter images using simple photo-retouching or programs like Photoshop. However, machine learning (ML) technologies have revolutionised the process. The first key development was Generative Adversarial Networks (GANs) which massively improved the quality and resolution of material produced, and reduced costs and time to produce. Very recently, a new type of algorithm known as ‘diffusion’ has produced even better results and enabled a wave of new ‘large image models’ which generate almost surreally convincing artwork and imagery.

Despite some harmless entertainment value, deepfakes are used primarily for more chilling purposes. Some 96% of deepfakes are fake pornography, usually non-consensual and typically designed to humiliate, harm, or seek financial gain (see Automating Image Abuse, Sensity 2020 and Bobby Chesney and Danielle Citron, ‘Deep Fakes: A Looming Challenge for Privacy, Democracy, and National Security’, (2019) 107 California Law Review, 1753). Deepfakes have also become notorious as a form of political ‘fake news’, which created considerable worry in the run-up to the last US election. Key to the dangers of deepfakes has been the ease of making and disseminating them via simple apps like DeepNude, online tutorials and social media platforms.

Three years ago, I suggested in a lecture for the Alan Turing Institute that although political deepfakes grab the headlines, deepfaked evidence in quite low-level legal cases – such as parking appeals, insurance claims or family tussles – might become a problem very quickly. We are now at the beginning of living in that future.

Much of what has been written concentrates on video evidence, but audio may well be the frontrunner for growth. In 2019, a lawyer acting for the father, resident in Dubai, in a UK custody dispute successfully challenged audio evidence apparently portraying him as violent and aggressive. By gaining access to the audio files, forensic experts were able to identify the recording as a ‘deepfake’, which the mother had put together using online help fora. Audio is still relatively quick and easy to fake convincingly compared to video and photos, and high-quality mass-market apps are already freely available to create ‘voice clones’. Videos variously claim that a voice can be cloned using only five minutes of recorded speech or even five seconds. Combine this tech with apps like Google Duplex, designed to let you have an AI make calls for you, and audio deception becomes extremely simple. Audio deepfake detection is also advancing; however, it probably lags behind image detection (see Almutairi, Zaynab and Hebah Elgibreen, 2022. ‘A Review of Modern Audio Deepfake Detection Methods: Challenges and Future Directions’ Algorithms 15, no. 5: 155).

Family law is not the only area where audio evidence may be crucial; criminal fraud is likely to be another. Symantec reported to the BBC in 2019 that they had seen three cases of seemingly deepfaked audio of different chief executives used to trick senior financial controllers into transferring cash. CNN has predicted that robo-calling is likely to be combined with voice-spoofing to persuade consumers to part with cash and give away passwords. Apps can also erase ‘suspect’ accents (such as Indian or Filipino), replacing them with anglophone tones. A security researcher has recently demonstrated how deepfake video can bypass banking authentication systems.

How can or will courts deal with authentication of evidence in the deepfake era? In the US, the courts have historically required authentication of videos entered in evidence, but the threshold has been set at a very low level (see: Riana Pfefferkorn ‘‘Deepfakes’ in the courtroom’ 2020 Public Interest Law Journal 245). However, this may be about to change: a defence lawyer in court proceedings following the 6 January 2021 attack on the US Capitol after Trump’s election defeat suggested that, given advances in deepfakes, incriminating publicly posted YouTube videos cited by the prosecution should not be regarded as authentic without proof that they had not been altered or a consistent chain of custody for the video. In the UK, similarly, video and audio are not usually excluded as hearsay, and in civil litigation in any case the hearsay rule has been abolished for documentary evidence (including images). However, in criminal cases, a provision under PACE requiring certification of computer evidence was abolished in 1999 and replaced by the common law presumption that a computer operates correctly in producing electronic evidence. This presumption has become controversial, especially since the Post Office postmasters’ scandal, but its potentially important application to deepfake evidence has been little discussed. It is possible that it sets up a presumption that video evidence is legitimate even where a deepfake is suspected (see Daniel Seng and Stephen Mason ‘Artificial Intelligence and Evidence’ (2021) 33 SaCLJ 241 at 269).

Much technical work has been done on trying to create digital images which prove their own authenticity by ‘provenance’ techniques (eg the mobile app Truepic stamps a digital watermark on every picture taken with that mobile device’s camera), but these cannot be very effective without creating an entire mandatory hardware and software ecology for digital cameras and editing apps, which seems highly implausible. ‘[T]he general concept of a ‘self-authenticating’ image and how it might be used in a court of law is still something which needs to be evaluated’, said the House of Lords presciently in 1998 (1998 HL Science and Technology Committee, 5th Report Digital Images as Evidence). Detection without watermarks is possible, and ML tools exist to help (eg DeepTrace), but it remains an arms race; classically, as soon as the lack of realistic eye-blinking was noted in facial deepfakes, more realistic eye-blinking was incorporated into the apps.
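For readers who want a feel for what a provenance ‘stamp’ involves, the idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a description of how Truepic or any real product works: the function names `stamp` and `is_unaltered` and the device key are hypothetical, and a keyed hash (HMAC) stands in for whatever watermarking or signing scheme a real tool would use.

```python
import hashlib
import hmac


def stamp(image_bytes: bytes, device_key: bytes) -> str:
    """Return a keyed SHA-256 digest of the image as captured (hypothetical stamp)."""
    return hmac.new(device_key, image_bytes, hashlib.sha256).hexdigest()


def is_unaltered(image_bytes: bytes, device_key: bytes, recorded_stamp: str) -> bool:
    """Recompute the stamp and compare it with the one recorded at capture."""
    return hmac.compare_digest(stamp(image_bytes, device_key), recorded_stamp)


# Illustrative values only: a stand-in for image data and a made-up device key.
original = b"raw pixels as captured by the camera"
key = b"hypothetical-device-secret"
tag = stamp(original, key)

print(is_unaltered(original, key, tag))               # file unchanged since capture
print(is_unaltered(original + b" edited", key, tag))  # any change breaks the match
```

Even in this toy form the limitation is visible: the check only helps if the stamp was created at the moment of capture by a trusted device, which is why such schemes depend on the wider hardware and software ecology.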

Law is the other source of solutions. A 2021 Dutch report (Tilburg University) argues that defendants or litigants themselves, especially the vulnerable, cannot simply be left to challenge possibly deepfaked evidence, given the potential impact on the justice system. Instead, its authors suggest that duties of care might be variably imposed on lawyers, police, prosecutors and even judges to have all evidence submitted verified by an independent forensic expert. All these options would be expensive and take up time, especially given a shortage of relevant experts (a national centre of expertise might help, they suggest). Finally, they suggest that sanctions for introducing false evidence could be beefed up or enforced more stringently.

Deepfake evidence cannot be ignored as a passing novelty. The likely trends are that the large language and image models used to generate deepfakes will grow in size and sophistication, and that open source, open access models may become the norm, allowing still greater tinkering by malicious users. Platforms may try to remove and block access to deepfake apps, models and training videos, but are unlikely to succeed. Manual detection by lawyers, judges, jurors and clients will become increasingly difficult. The Royal Society found in January 2022 that the vast majority of people struggle to distinguish deepfakes from genuine videos without a warning label. Using two professionally produced deepfake videos (the Obama and the Tom Cruise cited above), they discovered that most people (78.4%) could not distinguish the deepfake from authentic content. Worse still, public awareness of deepfakes, often touted as an educational ‘silver bullet’, might lead to a tactic of discrediting genuine evidence as potentially fake (a ‘liar’s dividend’), leaving the ‘reasonable doubt’ threshold of criminal law damaged beyond repair.

The US expert Riana Pfefferkorn suggests lawyers prepare for deepfake evidence by budgeting for digital forensic experts and witnesses and swotting up on deepfake technology sufficiently to be able to spot outward signs that the evidence has been tampered with (‘Courts and lawyers struggle with growing prevalence of deepfakes’, ABA Journal). Ethical concerns may arise if a client is pushing their lawyer too hard to use certain evidence. ‘If it’s a smoking gun that seems too good to be true, maybe it is.’

The Chair of the Bar sets out how the new government can restore the justice system

In the first of a new series, Louise Crush of Westgate Wealth considers the fundamental need for financial protection

Unlocking your aged debt to fund your tax in one easy step. By Philip N Bristow

Possibly, but many barristers are glad he did…

Mental health charity Mind BWW has received a £500 donation from drug, alcohol and DNA testing laboratory, AlphaBiolabs as part of its Giving Back campaign

The Institute of Neurotechnology & Law is thrilled to announce its inaugural essay competition

How to navigate open source evidence in an era of deepfakes. By Professor Yvonne McDermott Rees and Professor Alexa Koenig

Brie Stevens-Hoare KC and Lyndsey de Mestre KC take a look at the difficulties women encounter during the menopause, and offer some practical tips for individuals and chambers to make things easier

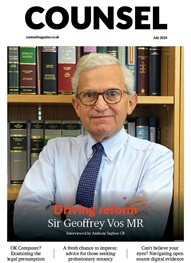

Sir Geoffrey Vos, Master of the Rolls and Head of Civil Justice since January 2021, is well known for his passion for access to justice and all things digital. Perhaps less widely known is the driven personality and wanderlust that lies behind this, as Anthony Inglese CB discovers

The Chair of the Bar sets out how the new government can restore the justice system

No-one should have to live in sub-standard accommodation, says Antony Hodari Solicitors. We are tackling the problem of bad housing with a two-pronged approach and act on behalf of tenants in both the civil and criminal courts