
The reluctance of governments to set agreed universal standards will inevitably mean that regulators have to fill the gap, says Sara Ibrahim. How are UK regulators preparing and what are the issues facing practitioners?

Debate around artificial intelligence (AI) and the urgent need to regulate its development and use appeared to reach a zenith at the time of the UK government’s Bletchley AI summit in November 2023. The desire of western governments to appear quick to react to the dangers of AI was typified by the US Vice President announcing a new US executive order on the ‘Safe, Secure, and Trustworthy Development and Use of AI’ on the eve of the conference.

Since then, the fanfare over AI regulation seems to have petered out. The US government’s own announcement at the end of January this year on key national and federal AI actions taken since the executive order received little in the way of recognition. Similarly, the announcement the same month of the publication of the final text of the EU Artificial Intelligence Act (‘AIA’) received a muted reception. This is perhaps unsurprising given that the AIA was formally proposed in April 2021, almost three years earlier. It would be unwise, however, to presume that this means AI regulation will not be meaningfully enacted. The reluctance of governments to set agreed universal standards will inevitably mean that regulators have to fill the gap.

The UK government, in its response to the consultation on its AI White Paper, gave regulators until 30 April to set out their strategic approach to AI, with the potential prize of a share of £10 million in public funds for regulators to develop appropriate tools to respond to AI. With each regulator setting out its own approach, practitioners will need to be wary of overlapping and potentially conflicting regulatory frameworks, whether in cross-border matters or where there is more than one potential regulator. There is the additional wrinkle that a number of the government’s deadlines in its consultation response run through 2024, and these could be delayed by a general election and, ultimately, a new administration.

Regulation is required to address existing harms that present in new guises or in a more pronounced form. One such case is the use of AI, and specifically generative AI, to produce misleading content at speed, including text and images (for instance, whole websites). This could lead to new cases of fraud against consumers and even companies. Another example of an old problem in a new guise is bias and discrimination, which could breach provisions of the Equality Act 2010 as well as the principle of data accuracy required by the UK General Data Protection Regulation.

There is a range of views about the need for new regulation of AI. Some instances of AI harm are so serious that they demand immediate intervention, such as the use of phone apps leading to widespread depression and even suicidal ideation among children and young people. This must be weighed against the real societal advantages of using AI. In 2023 a United Nations policy brief opined that AI could assist in combatting intractable issues such as modern slavery and other human rights abuses. The policy brief suggested that the appropriate use of AI would enable companies to cooperate across jurisdictions to ensure the integrity of supply chains, a challenge that has hampered previous efforts to stamp out modern slavery.

Further, it would be remiss not to acknowledge the desire of governments to create a nurturing environment for tech and AI creators. These views are likely to change over time, as the appetite for greater or lesser regulation can go through cycles, as was evident in the Singapore government’s attitude to the regulation of cryptocurrencies when it introduced the Payment Services Act. The current UK government appears minded to create a clement investment environment for AI and technology use. However, this might change if a further shift in AI capabilities creates panic about the harms inherent in new technologies.

The presumption has been that AI will be relevant to a small number of technology-related sectors and startups. This is overly simplistic, given the enthusiasm of some businesses for using AI, including generative AI tools such as ChatGPT, to minimise costs and increase productivity. Further, the opacity of new AI tools that provide hyper-specialised services has meant that many have failed to notice their potentially pernicious outcomes.

The Competition and Markets Authority (CMA) has been keen to protect consumers from such impacts. In its 2021 paper on algorithmic pricing, the CMA highlighted the risks of personalised pricing, noting that Uber had identified in 2016 that potential riders were more likely to pay higher ‘surge’ prices if their phones were almost out of battery. The CMA’s response to the government white paper on AI focused on the general duty of traders not to trade unfairly, which includes compliance with equality and data protection law. It went as far as to warn traders that: ‘Indeed, in preparation for potential regulatory intervention, we suggest it is incumbent upon companies to keep records explaining their algorithmic systems, including ensuring that more complex algorithms are explainable.’ This would fundamentally shift the burden onto companies using algorithms to explain their use of AI and ensure transparency, rather than relying on the ‘black box’ (i.e. the notion that the methods or processes used by AI are unfathomable).

Not all regulators appear to believe that an overhaul of approach is needed. The Financial Conduct Authority (with the Bank of England) produced a report on AI and machine learning in 2022 which suggested that the key focus should be regulatory alignment and that existing governance structures were sufficient to deal with any issues thrown up by AI. It will be interesting to see how regulators approach the introduction of the new Consumer Duty, especially as it will capture all products and services from 31 July 2024 onwards. The current period might therefore be considered a ‘grace period’ in which regulators allow for further preparation to meet the new duty.

In order to enforce their existing regulatory powers in an AI context, some regulators have recruited teams of specialists who will be able to scrutinise AI systems and how they have been used. The CMA has a new team which includes data scientists, data engineers, technologists, foresight specialists and behavioural scientists. Companies will need a high level of engagement with AI and how it works to satisfy regulators who have access to such expertise.

I was very struck when, at an industry event, the general counsel of a company with a large international presence said that it aspired to offer the same experience to employees wherever they are in the world. This lofty ambition, while credible, could create real headaches for lawyers. Even where a client is located in one jurisdiction, the AI provider may be based (or data processing may occur) in another jurisdiction, or even in multiple jurisdictions. It is hard to see how larger companies will be able to avoid dealing with a multitude of overlapping and even conflicting regulatory systems. They will typically have to comply with the most stringent applicable regime rather than risk non-compliance.

Furthermore, the uncertainty around the use of AI means that lawyers will have to keep abreast of developments in how regulators deploy their powers. There might be a temptation on the part of regulators to make examples of technology behemoths such as Google, Amazon, Apple, Meta and Microsoft. Yet companies outside these five could be of even greater interest to regulators, especially where there are fundamental issues over surveillance or monitoring (typically in the workplace) or in instances of automated decision-making. Almost all regulators will want to see evidence of ‘humans in the loop’ in the decision-making process, and in the rush to save time and money, this is where clients risk falling down.


The Chair of the Bar sets out how the new government can restore the justice system

In the first of a new series, Louise Crush of Westgate Wealth considers the fundamental need for financial protection

Unlocking your aged debt to fund your tax in one easy step. By Philip N Bristow

Possibly, but many barristers are glad he did…

Mental health charity Mind BWW has received a £500 donation from drug, alcohol and DNA testing laboratory, AlphaBiolabs as part of its Giving Back campaign

The Institute of Neurotechnology & Law is thrilled to announce its inaugural essay competition

How to navigate open source evidence in an era of deepfakes. By Professor Yvonne McDermott Rees and Professor Alexa Koenig

Brie Stevens-Hoare KC and Lyndsey de Mestre KC take a look at the difficulties women encounter during the menopause, and offer some practical tips for individuals and chambers to make things easier

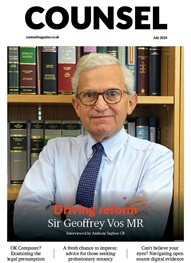

Sir Geoffrey Vos, Master of the Rolls and Head of Civil Justice since January 2021, is well known for his passion for access to justice and all things digital. Perhaps less widely known is the driven personality and wanderlust that lies behind this, as Anthony Inglese CB discovers

The Chair of the Bar sets out how the new government can restore the justice system

No-one should have to live in sub-standard accommodation, says Antony Hodari Solicitors. We are tackling the problem of bad housing with a two-pronged approach and act on behalf of tenants in both the civil and criminal courts