
Crowdsourcing court decisions? Will it ever take over from the pedigree and reputation of the Bar? By Simon Gittins

Within ‘legal’, an industry sector bigger than defence that could still – it appears – quite happily run on paper alone, technologies like artificial intelligence (AI) and cloud computing have finally found a permanent home.

With a blanket exception on EIS and SEIS in legal for example (tax relief for investors applicable to just about all other industries), few applicable government grants, disparate often copyrighted data (case law for example) and rigid verticals (I am an ‘X’ lawyer), the economics of even trying to solve big problems in law are difficult.

(Enter stage left) ‘crowdsourcing’, a portmanteau of ‘crowd’ and ‘outsourcing’ first coined in a 2005 Wired magazine article and perhaps best known as the method behind an online resource which enabled this sentence, at least in part – Wikipedia. A team of US academics produced a white paper in December 2017 entitled Crowdsourcing accurately and robustly predicts decisions of the Supreme Court (Katz, Bommarito and Blackman). But can we rely on crowdsourcing, and how could it take over from the pedigree and reputation of the Bar?

Let’s jump straight in: think ‘Fantasy Football’. An online competition was set up with a prize of ten thousand dollars and anyone could participate. Each participant predicts how each justice will vote in a number of US Supreme Court decisions. They can only do that before the decision and they can change their minds before the final result. If they are right more often than others they win the competition. Of course, no one cares about the competition; it’s just a mechanism to engage a sample set of users. The result is that over 80% of the decisions of the Supreme Court were correctly predicted by the crowd.

Is 80% a significant number? Yes it is. Companies such as Premonition are already using ‘big data’ to predict counsel’s performance from case law and have published the names of barristers with 100% success rates in the higher courts (Lawyers by Win Rate). Will your barrister be chosen on statistics and can we now put legal cases to the popular vote? That answer depends on what exactly happened and what its potential application might be.

The paper says that the participants will exhibit some ‘selection biases’ although they come from a wide range of backgrounds. The website hosting the ‘FantasySCOTUS’ league gives an indication that its followers are ‘thousands of attorneys, law students, and other avid Supreme Court followers’. Indeed there are fantasy leagues at various US law schools but with just one case decided so far in 2018 (at time of writing) – everything is still to play for! (If anyone sets up a UK Supreme Court league – let me know.)

All in all, 7,284 participants took part. If you multiply 7,284 by the 425 cases studied with ten justices, you get over 30 million. In fact, there are only some 600,000 actual data points, largely because participants joined and left the competition. Engagement within each term (for ‘term’ read ‘year’) was also partial: in 2011, say, there were 1,336 participants and 82 cases, but each participant – on average – only predicted seven of them. Some participants changed their minds on a justice’s decision many times, some never, but on average each changed their minds at least once. Analytically, you may be worried by this but you shouldn’t be. This is, as the paper states, a true measure of crowdsourcing generally as opposed to the performance of a single ‘set’ crowd.
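The gap between the theoretical and actual number of data points can be checked with a few lines of arithmetic. This is only a back-of-the-envelope sketch using the figures quoted above; the 600,000 figure is the rounded number given in this article, and the variable names are purely illustrative.

```python
# Figures quoted in the article; 600,000 is a rounded approximation.
participants = 7_284
cases = 425
justices = 10

# Upper bound: every participant predicts every justice in every case.
theoretical_max = participants * cases * justices

# Approximate number of predictions actually recorded.
actual_predictions = 600_000

# Share of the theoretical maximum that was actually filled in.
coverage = actual_predictions / theoretical_max

print(f"Theoretical maximum: {theoretical_max:,}")
print(f"Coverage: {coverage:.1%}")
```

On these numbers the crowd filled in roughly 2% of the possible predictions, which is why the study treats the crowd as a constantly changing group rather than a fixed panel.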

What we care about of course is court decisions. In the study, whilst the justice level decisions are called ‘predictions’, the case level decisions were limited to cases which strictly were able to ‘uphold’ or ‘reverse’ the lower court’s decision.

If you’ve challenged expert statistics before you might inherently feel that there is some in-built baseline and that cases aren’t just ‘half upheld’ and ‘half reversed’ (those which didn’t fit this binary analysis, for example if they settled, were excluded). And you’d be right. At a case level, the lower court’s decision was reversed 64.6% of the time. That is the baseline. If you always ‘guess’ that the decision of the lower court will be overturned, you’d beat 50:50 every day of the week. The reason is simply that the Supreme Court decides what it wants to hear and so it is slightly more inclined to hear cases that have a decision it wishes to reverse.
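The ‘always guess reverse’ baseline is easy to express in code. The sketch below is not taken from the paper: the function name is hypothetical and the list of outcomes is synthetic toy data, constructed only so that it reproduces the 64.6% reversal rate quoted above.

```python
from collections import Counter


def always_guess_baseline(outcomes):
    """Return the most common outcome and the accuracy of always guessing it."""
    counts = Counter(outcomes)
    label, hits = counts.most_common(1)[0]
    return label, hits / len(outcomes)


# Synthetic toy data (an assumption): 646 reversals in every 1,000 cases.
outcomes = ["reverse"] * 646 + ["affirm"] * 354

label, accuracy = always_guess_baseline(outcomes)
print(label, accuracy)
```

Any prediction method, crowd or otherwise, has to be judged against this figure rather than against a coin toss.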

Using various modelling techniques, the paper first gives an equal weight to all participants. This gives an accuracy of just 66.4% which, set against the baseline, is hardly significant. However, the authors then add in statistics to allow for ‘following the leader’, that is, giving weight in a model only to those who, by some performance metric, are in the top ‘x’ participants. A further analysis adds in ‘experience’, to consider those in the crowd that have made more predictions. By combining these and similar modelling techniques the headline figure of 80.8% is achieved. By adjusting the variables in the models, the paper is able to produce a large number (hundreds of thousands) of models and then model their robustness.
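To make the three ideas concrete (equal weighting, ‘following the leader’ and ‘experience’), here is a minimal sketch of a weighted crowd vote. It is an illustration only and not the paper’s actual models: the function names, the participants and all the numbers are hypothetical.

```python
from collections import defaultdict


def crowd_prediction(votes, weights=None):
    """Return the outcome with the largest total weight.

    votes maps participant -> "reverse" or "affirm".
    weights maps participant -> weight; None means everyone counts equally.
    Participants missing from weights are given a weight of zero.
    """
    totals = defaultdict(float)
    for person, vote in votes.items():
        weight = 1.0 if weights is None else weights.get(person, 0.0)
        totals[vote] += weight
    return max(totals, key=totals.get)


def top_x_weights(past_accuracy, x):
    """'Follow the leader': only the x best past performers get a vote."""
    leaders = sorted(past_accuracy, key=past_accuracy.get, reverse=True)[:x]
    return {person: 1.0 for person in leaders}


def experience_weights(predictions_made, minimum):
    """'Experience': only those with at least `minimum` past predictions vote."""
    return {p: 1.0 for p, n in predictions_made.items() if n >= minimum}


# Hypothetical crowd of five for a single case.
votes = {"ann": "affirm", "bob": "affirm", "cat": "reverse",
         "dan": "affirm", "eve": "reverse"}
past_accuracy = {"ann": 0.55, "bob": 0.50, "cat": 0.80, "dan": 0.52, "eve": 0.75}
predictions_made = {"ann": 3, "bob": 40, "cat": 120, "dan": 5, "eve": 90}

equal = crowd_prediction(votes)
leaders = crowd_prediction(votes, top_x_weights(past_accuracy, 2))
experienced = crowd_prediction(votes, experience_weights(predictions_made, 30))
print(equal, leaders, experienced)
```

In this toy example the simple majority says ‘affirm’, but both the top two performers and the more experienced participants say ‘reverse’, which shows how the choice of weighting can change the crowd’s answer.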

The data here is from the crowd itself, from real people. There are other approaches, like a recent study by University College London which used AI and natural language processing to predict European Court of Human Rights case results with 79% accuracy by reading the facts of each case. Though as that paper states it ‘should be borne in mind when approaching [the] results’ that the facts were taken from the judgments themselves: see Aletras, Tsarapatsanis, Preoţiuc-Pietro and Lampos (question whether, when you read the very first ‘factual’ sentence of a Denning judgment for example, the result for the cricketers could ever have come as a great surprise: ‘In summertime village cricket is the delight of everyone.’).

In the US study, there is no mass reading using AI of case papers and judgments to provide predictions. The crowd itself is relied upon with all its inherent political or other biases. The judges too, just like the crowd, are not without their biases either; they are after all political appointments. ‘In short, the decision making of the supreme court is not a random process. If you know who voted with whom in the past, they are far more likely to do so again in the future.’

In fact, each judge appointed by the president is usually known as liberal, conservative or moderate. Neil Gorsuch, the most recently appointed – by President Trump – is described as a ‘solidly conservative judge’ who has a record for ‘strictly interpreting constitution’. Gorsuch, according to SCOTUSBlog, has voted 100% of the time with conservative judge Clarence Thomas. Similarly Roberts and Kennedy vote together (centre right) and on the left, Ginsburg and Sonia Sotomayor voted together 78% of the time according to journalist Nina Totenberg.

Historically, there are a large number of decisions with a majority of one (eg 5 to 4) and a large number of unanimous decisions. But perhaps that is not a surprise. Are some decisions obvious to the crowd? Anecdotally, social media interactions with current or former colleagues turned up few who ‘guessed’ the outcome of the Gina Miller challenge incorrectly even though three of our Supreme Court judges voted against the decision. (You’d expect barristers to get it right wouldn’t you, else what’s the point?)

But these are things that are not built into the model already and will, if modelled successfully, only serve to increase its accuracy.

The question is really whether we should be surprised about society predicting the results of its own Supreme Court. Successive governments are elected, after all, and justices of the Supreme Court in the US are appointed by those that are elected. Laws are passed and decided upon over many years of successive governments themselves elected by a form of constantly differing crowd.

If it’s your case, you will still need to argue it, present it, appeal it, fund it and win, and if you are going to present a truly novel argument, you might need someone to come up with that argument in the first place. We rely on a small crowd of just 12 people to decide factual cases. What if we did that with 12 million? Or, instead of 12 justices of the Supreme Court, what if we used 1,200 lawyers? The Bar might be amongst the last to feel the effects but, as their accuracy increases, big data and crowdsourcing are here to stay.


Crowdsourcing court decisions? Will it ever take over from the pedigree and reputation of the Bar? By Simon Gittins

The Chair of the Bar sets out how the new government can restore the justice system

In the first of a new series, Louise Crush of Westgate Wealth considers the fundamental need for financial protection

Unlocking your aged debt to fund your tax in one easy step. By Philip N Bristow

Possibly, but many barristers are glad he did…

Mental health charity Mind BWW has received a £500 donation from drug, alcohol and DNA testing laboratory, AlphaBiolabs as part of its Giving Back campaign

The Institute of Neurotechnology & Law is thrilled to announce its inaugural essay competition

How to navigate open source evidence in an era of deepfakes. By Professor Yvonne McDermott Rees and Professor Alexa Koenig

Brie Stevens-Hoare KC and Lyndsey de Mestre KC take a look at the difficulties women encounter during the menopause, and offer some practical tips for individuals and chambers to make things easier

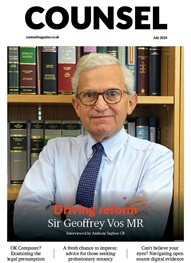

Sir Geoffrey Vos, Master of the Rolls and Head of Civil Justice since January 2021, is well known for his passion for access to justice and all things digital. Perhaps less widely known is the driven personality and wanderlust that lies behind this, as Anthony Inglese CB discovers

The Chair of the Bar sets out how the new government can restore the justice system

No-one should have to live in sub-standard accommodation, says Antony Hodari Solicitors. We are tackling the problem of bad housing with a two-pronged approach and act on behalf of tenants in both the civil and criminal courts