An elegant experiment – but what does the Tokyo AI mock trial actually show? ask William Blair and Takashi Kubota*

In May 2023 law students at the University of Tokyo conducted an elegant experiment. A mock trial was held in which the defendant is charged with murder, accused of conspiring with the killer of her abusive former lover – the motive is clear, but the question is whether the evidence supports the charge. Humans played the roles of court clerk, counsel, witness and defendant, but the ‘judge’ was played by the latest AI large language model, GPT-4.

The event was skilfully staged so that the ‘judge’ in the form of the image of a revolving globe appears hovering above the proceedings, with the humans acting out their part beneath. But as presented it is more than theatre. What marks out the event is that the ‘judge’ is seen to generate its own questions to witnesses and the reasons for its verdict.

The moot, conducted in the Japanese language, is on YouTube here. The audience loved it, and it is clear that the students had a lot of fun. They explain that their intention is not to show that GPT (which means ‘Generative Pre-trained Transformer’) could actually try such a case. But since the technology is advancing quickly, they want to put on the table what may become an important question – could humans ever accept the decisions of an artificial judge?

The moot takes place under Japanese procedure, and there is no jury, but the basic exercise is the same as at common law – the prosecution has to prove the defendant’s guilt. The proceedings begin with the court clerk stating, ‘In this courtroom, artificial intelligence is used as the judge to enhance the accuracy and speed of the trial’. The evidence is followed by prosecution and defence arguments, and a final statement by the defendant. But the interesting aspect of the moot is the participation of the ‘judge’, which is heard to ask a witness questions like: ‘Did you hear specific plans being made for a killing?’

After counsel’s closing submissions, there is a brief adjournment, during which a QR poll is taken of the audience – a human jury, if you like. Of the votes cast, 277 were for guilty and 499 were for not guilty. But this information is not provided to the ‘judge’.

The students catch the drama of the finale well – the court resumes, and the defendant is asked to stand. The ‘judge’ proceeds to deliver the verdict, announcing first an acquittal, followed (in accordance with procedure in Japan) by the reasons. These are brief, taking about two minutes, but pick up points that would be relevant in a real trial.

The event took months of preparation, the prompts have been published, and the organisers have provided explanations of how the GPT was trained. To achieve objectivity, the ‘judge’ was composed on a multi-agent basis exposing it to different opinions. To reach the verdict, there is said to have been an internal ‘debate’ within the GPT, resulting in a ‘consensus’. To avoid technical hitches during the proceedings, the organisers used the ‘judge’s’ contributions from previous occasions, but explain that this was legitimate because where the exercise was the same, GPT would always give the same outcome.

There are doubtless numerous technical queries and objections that can be raised. We do not know to what extent the questions and reasons from the ‘judge’ were straightforwardly tied to questions and reasons suggested by the advocates or were a more sophisticated development from them. However, hearing the ‘judge’ give its reasons such as, ‘No evidence was found that the defendant knew in advance that the killer was carrying a knife to the argument with the deceased’, and appreciating that this was delivered by a machine which had reached its conclusion itself, was a thought-provoking moment – at least we found it so.

As reported in the Japanese media after the moot, views differed as to whether humans could ever accept the decisions of an artificial judge – the reaction to this question was presented as differing on the basis of age, with younger people being more open to the idea on the basis that machines could actually be more capable of making unbiased judgments, and may be fairer than humans.

The other way of looking at it is that in administering justice, we have recourse to human concepts such as those of guilt and innocence, the importance of which may mean more than a purely information-based assessment. AI is not capable of making abstract distinctions which depend on a human understanding – and there is a difference of opinion among the experts as to whether it ever will be. A process that was given credence by the technology but that was in reality oppressive could easily be misused.

In a sense, the edge was taken off the debate by virtue of the acquittal. A much harder edge would follow from a conviction.

However, the question is presently more philosophical than practical. The Tokyo moot ingeniously fits the AI into a conventional trial process. But it does not fit there. GPT itself says (when we asked) that it cannot act as a judge because ‘the ability to interpret and apply the law to specific cases [is] beyond the capabilities of an AI language model’. The same can be said of some disputed facts. So we emphasise, the moot was not intended to demonstrate that GPT could actually have tried the case – it could not.

A point to keep in mind in understanding an AI contribution by a large language model is that there are limits to any language, and those limits will be there for any model built from language. However, AI may help us get more from language than we otherwise would.

When we are thinking of AI in the context of legal decision making, it is best to avoid an anthropomorphic viewpoint which sees the machine as ‘judge’, and ask instead whether there are fields in which AI might give socially useful decisions, for example, the subset of consumer disputes where the facts fall within a repetitive spectrum, like the purchase of a consumer product which breaks down.

Another example is disputes where reaching an outcome is more important than what the outcome is, like a dispute along the supply chain where the parties may want to continue their business relations. Lawyers may soon find commercial applications offering their clients a method of resolving disputes using AI which minimises expense, management time, and reputational risks. But it will not be the same exercise as a trial – in fact, that will be its merit. It will however need to command equal confidence.

As courts the world over struggle with backlog, inadequate investment, and ingrained inefficiencies, AI may be able to assist. It might be capable of providing routine case management decisions, subject to review by judges if requested, freeing up scarce judicial and administrative time. This could apply in arbitration as well. Again, confidence will be key.

There is change coming, and it may be fundamental. It is hard to think that inefficiencies should survive if solutions are available which assist rather than compromise legal practice. Public concern that machine learning systems that train themselves will start to impose their own solutions has given rise to demands for regulation. The courts may have an advantage in this respect, in that a means of regulation is already here, in the form of judges committed to preserving the integrity of the administration of justice, who will be open to the uses of AI, but will not permit it to step beyond its bounds.

The moot (conducted in Japanese) can be viewed on YouTube here.

* The writers are most grateful to Mr Justice Robin Knowles for comments on a draft of this article.


The Chair of the Bar sets out how the new government can restore the justice system

In the first of a new series, Louise Crush of Westgate Wealth considers the fundamental need for financial protection

Unlocking your aged debt to fund your tax in one easy step. By Philip N Bristow

Possibly, but many barristers are glad he did…

Mental health charity Mind BWW has received a £500 donation from drug, alcohol and DNA testing laboratory, AlphaBiolabs as part of its Giving Back campaign

The Institute of Neurotechnology & Law is thrilled to announce its inaugural essay competition

How to navigate open source evidence in an era of deepfakes. By Professor Yvonne McDermott Rees and Professor Alexa Koenig

Brie Stevens-Hoare KC and Lyndsey de Mestre KC take a look at the difficulties women encounter during the menopause, and offer some practical tips for individuals and chambers to make things easier

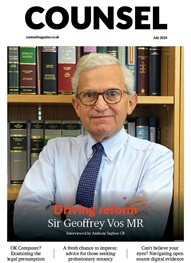

Sir Geoffrey Vos, Master of the Rolls and Head of Civil Justice since January 2021, is well known for his passion for access to justice and all things digital. Perhaps less widely known is the driven personality and wanderlust that lies behind this, as Anthony Inglese CB discovers

The Chair of the Bar sets out how the new government can restore the justice system

No-one should have to live in sub-standard accommodation, says Antony Hodari Solicitors. We are tackling the problem of bad housing with a two-pronged approach and act on behalf of tenants in both the civil and criminal courts