Subjecting tech colossi to the rule of law while defending expressive freedoms online is a formidable task legislators have barely begun – but post-Cambridge Analytica change is in the air, writes David Anderson QC

This February saw the death of Wyoming-born John Perry Barlow, Grateful Dead lyricist and libertarian visionary.

In 1996, Barlow famously expressed the pioneering philosophy of the internet in his Declaration of the Independence of Cyberspace:

‘Governments of the Industrial World, you weary giants of flesh and steel… You are not welcome among us. You have no sovereignty where we gather…

‘We are forming our own Social Contract. This governance will arise according to the conditions of our world, not yours. Our world is different.’

Influential as those words have been, there are signs that their currency may not long outlive their charismatic author.

The weary giants of flesh and steel remain uncertain of their place in cyberspace. But they have been joined there by new and more vigorous colossi. The huge tech companies that have sprung up in the years since Barlow’s Declaration provide their services in a borderless digital world that no government can emulate, and few have dared to constrain. Their wealth is based on vast quantities of personal data – information that we freely supply to them every time we disclose our interests and desires in an eBay search, a Facebook like or a Gmail message.

All who venture online can benefit from the remarkable connectivity that these companies provide. But their largely unlimited power to accumulate, aggregate and analyse our data enables them to target advertising with an accuracy that leaves traditional media struggling to compete. That access to personal data affords the largest internet companies immense power, both commercial and – should they choose to use it – political.

For some, the significance of the tech colossi lies in their ability to help us bypass or at least reduce the footprint of government. Why should buyer and seller have to resort to a real-world, state-run small claims court, when the eBay Resolution Centre – applying algorithms known only to itself – quickly and cheaply resolves 60 million disputes annually? Why need public authorities concern themselves with the standard of holiday lets or the licensing of vehicles for private hire, when the likes of Airbnb and Uber can not only connect individual users and providers, but provide specific feedback on each?

For others, the likes of Facebook’s Mark Zuckerberg and Google’s Sergey Brin are more aptly compared to Carnegie and Rockefeller: industrialists who extracted resources (iron ore and oil in the 19th century, data in the 21st), crushed competitors, leveraged their power downstream and achieved wealth unprecedented in human history. In 1911 the new anti-trust laws eventually caught up with Rockefeller’s Standard Oil. This time around, the impotence of democratic governments in the face of the tech colossi is evident from their ineffective attempts to tax them and (with limited exceptions, led globally by the European Union) to control the use they make of our data.

Conflicting perceptions of internet platforms as enablers of personal liberty on the one hand and irresponsible monopolists on the other play out in a number of areas, including those just mentioned. But just as important is the issue of content regulation. For Barlow, the internet was ‘creating a world where anyone, anywhere may express his or her beliefs, no matter how singular, without fear of being coerced into silence or conformity’. Such ideals retain a strong appeal in Silicon Valley, particularly in the start-up culture where First Amendment freedoms burn brightly, user anonymity is prized and trust in government is low. But the spreading of nefarious material via the internet has provoked more interventionist tendencies, especially in Europe but also in the USA.

It is barely six years since Twitter described itself, in the UK, as ‘the free speech wing of the free speech party’. But a number of forces have come together to change the mood. Politicians credibly accuse internet platforms (or intermediaries) of facilitating terrorism and sexual exploitation. Advertisers see their brands displayed next to extreme or unsavoury content, and threaten to withdraw their custom. Old media, smarting from the loss of advertising to Google and Facebook, demand regulation equivalent to that which they themselves face.

The current state of content regulation is rudimentary, fractured and – it seems fair to assume – transitional. A sense of it may be gained from the regular evidence sessions, available online, in which the large intermediaries are called to account by Parliament’s Home Affairs Select Committee. Facebook, Twitter and YouTube speak of their high standards, their terms of service and their internal guidelines. They claim credit for recruiting human moderators, for devising automated techniques to detect harmful content, and for suspending and deleting ever-larger numbers of accounts. The Committee in turn criticises them for lack of transparency, for the patchy and inconsistent application of their standards and for their unwillingness to volunteer information that could help law enforcement to prevent terrorist incidents. Outside observers note the sub-optimal nature of a system under which content rules are devised in an ad hoc manner by private companies, under pressure from political and commercial interests and without public debate or visibility.

The essence of current practice is its reactivity. It operates not on the basis that intermediaries are responsible for the content posted by others on their platforms, but on a ‘report and takedown’ basis. Harmful material is notified to the intermediary, by a member of the public or perhaps by a ‘trusted flagger’ such as CTIRU, the internet referral unit operated by Counter-Terrorism Policing. The intermediary applies its own guidelines and deletes the material if it is found to contravene them.

Even the German Network Enforcement Act of 2017 – the strongest and most controversial content law yet attempted in Europe – follows the reactive model. Under that Act, intermediaries may face fines of up to 50 million euros for failure to take down plainly illegal material within 24 hours of a complaint being received. When the Home Affairs Select Committee suggested last year that intermediaries might be asked to defray the costs of CTIRU, rather as a football club picks up policing costs on a match day, it was similarly working with the reactive grain.

Those who push for intermediaries to be labelled not as platforms but as publishers would like to make them responsible for the transmission of illegal content, in the manner of print outlets or broadcasters. But the law precludes such a proactive approach: s 230 of the 1996 Communications Decency Act in the USA, and Article 15(1) of the 2000 e-Commerce Directive in the EU. While intermediaries continue to be urged to take more responsibility, the latter provision has so far prevented the ‘report and takedown’ model from evolving in the UK into a more general duty on intermediaries to police their own platforms.

Article 15(1) has survived the growth of the tech colossi, providing a tangible link to the founding, free-speech philosophy of the internet. To replace it with a series of duties on intermediaries to police their own platforms would cause difficulties in practice as well as in principle. With 300 hours of video uploaded to YouTube every minute, the volume of online material manifestly exceeds the capacity for human moderation. Yet the alternative – automated censors relying on artificial intelligence – may lack the ability to spot irony and humour, to identify false or malicious reporting and to distinguish academic commentary from terrorist recruitment. Intermediary liability will incentivise caution, causing valuable expression to be blocked. So freedom-minded people prefer less prescriptive options: most agreeably, the offering of ‘counter-speech’ in the marketplace of ideas where, according to John Stuart Mill and his followers in the US Supreme Court, the good may be counted upon to drive out the bad.

But a functioning marketplace of ideas depends on its participants placing the highest value on what is good and true. The phenomena of fake news and online harassment suggest that many of us prefer, on the contrary, what is sensational, bias-confirming, discriminatory and false. Research recently published in the journal Science concluded that, over a ten-year period, falsehoods on Twitter travelled ‘significantly farther, faster, deeper, and more broadly than the truth, in all categories of information, and in many cases by an order of magnitude’. This looks like market failure, which in the context of political speech may threaten even the proper functioning of democracy. The correct liberal response is education in critical thinking. But as in other cases of market failure, regulation may also be required.

The large-scale use of the internet for illicit purposes ranging from copyright infringement to child sexual exploitation is already chipping away at free-speech protections such as Article 15(1). In the 2015 case of Delfi v Estonia, the European Court of Human Rights upheld the imposition of liability on a news portal which had taken six weeks to remove offensive reader comments, implying an obligation to monitor such user-generated content. In March 2018, the European Commission – echoing an earlier Franco-British Action Plan – published a Recommendation which exhorts companies to use proactive tools to detect and remove illegal content, particularly in relation to terrorism, child sexual abuse and counterfeit goods. Some large companies, encouraged by the authorities, are already moving in that direction.

The Committee on Standards in Public Life recommended recently that intermediaries should be made liable for the publication of unlawful material posted on their platforms. A further step would be an Ofcom-style model of regulation complete with transparency obligations, codes of conduct and sanctions for their breach. Interest in such a model has been expressed by the licensed broadcaster Sky. Itself subject to Ofcom’s Broadcasting Code, it complains (self-interestedly, but with some force) of the incongruity of applying different standards to broadcast and internet content, which increasingly converge on the same screen.

Until recently, the mainstream view has been that it is better for government to work with the tech colossi than to compel them to monitor their platforms for illegal words and images. But events move fast. Matt Hancock MP, the Digital Minister, now talks about the internet in terms of taming the Wild West. When even Mark Zuckerberg says that he would welcome more regulation of online political advertising, as he did in the wake of the Cambridge Analytica revelations, it seems fair to assume that change is on the way.

Hancock’s Shadow, Liam Byrne MP, has pertinently commented that for all the benefits brought by the last Industrial Revolution, it took numerous Factory Acts over the course of more than a century before its worst excesses were curbed. Controversy, wrong turnings and failures of imagination are similarly to be expected in the conceptually more complex task of determining how far and in what way the current information revolution is to be governed.

The weary giants of flesh and steel should not abandon the fray, but adapt their role to the new online realities. This means subjecting the tech colossi to the rule of law, while defending our expressive freedoms in their turbocharged online form. Good lawyers must be central to this tricky but inspiring endeavour. We should wish them well.

Contributor David Anderson QC practises from Brick Court Chambers with an emphasis on EU-related and public law. Between 2011 and 2017 he served as the UK’s Independent Reviewer of Terrorism Legislation. @bricksilk
Subjecting tech colossi to the rule of law while defending expressive freedoms online is a formidable task legislators have barely begun – but post-Cambridge Analytica change is in the air, writes David Anderson QC

The Chair of the Bar sets out how the new government can restore the justice system

In the first of a new series, Louise Crush of Westgate Wealth considers the fundamental need for financial protection

Unlocking your aged debt to fund your tax in one easy step. By Philip N Bristow

Possibly, but many barristers are glad he did…

Mental health charity Mind BWW has received a £500 donation from drug, alcohol and DNA testing laboratory, AlphaBiolabs as part of its Giving Back campaign

The Institute of Neurotechnology & Law is thrilled to announce its inaugural essay competition

How to navigate open source evidence in an era of deepfakes. By Professor Yvonne McDermott Rees and Professor Alexa Koenig

Brie Stevens-Hoare KC and Lyndsey de Mestre KC take a look at the difficulties women encounter during the menopause, and offer some practical tips for individuals and chambers to make things easier

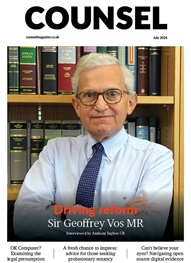

Sir Geoffrey Vos, Master of the Rolls and Head of Civil Justice since January 2021, is well known for his passion for access to justice and all things digital. Perhaps less widely known is the driven personality and wanderlust that lies behind this, as Anthony Inglese CB discovers

The Chair of the Bar sets out how the new government can restore the justice system

No-one should have to live in sub-standard accommodation, says Antony Hodari Solicitors. We are tackling the problem of bad housing with a two-pronged approach and act on behalf of tenants in both the civil and criminal courts